Google's ability to identify spam is being hampered by the proliferation of AI-generated, mass-produced material.

AI-generated content has made it harder for Google to determine what counts as high-quality content.

Nonetheless, there are signs that Google is strengthening its algorithmic recognition of subpar AI content.

Spammy AI content all over the web

You don't need to be an SEO expert to notice that, over the past 12 months, generative AI content has started appearing in Google search results.

Over that period, Google's official stance on AI-generated content shifted from "it's spam and breaks our guidelines" to "our focus is on the quality of content, rather than how content is produced."

I'm sure Google's emphasis on quality made it into many internal SEO decks pitching AI-generated content strategies. Google's position undoubtedly gave managers at many companies just enough leeway to approve them.

As a result, the web is awash with low-quality, AI-generated content, and some of it initially ranked in Google's search results.

Invisible junk

The portion of the internet that search engines decide to index and display in search results is known as the "visible web."

Thanks to testimony by Google's Pandu Nayak in the Google antitrust trial (summarized in "How Google Search and Ranking works"), we know that Google "only" maintains an index of about 400 billion documents, even though it discovers trillions of documents while crawling.

If "trillions" means roughly 10 trillion, only about 4% (400 billion / 10 trillion) of the documents Google finds while crawling the web get indexed.

Google says searchers are protected from spam on 99% of query clicks. If that is even roughly true, most content that isn't worth seeing has already been filtered out.

Content is king – and the algorithm is the Emperor’s new clothes

Google says it can accurately assess content quality. However, many experienced SEOs and site managers disagree, and most can point to examples of lower-quality content outranking higher-quality content.

Any reputable company that invests in content is likely to be in the top few percent of "good" content on the web, and its competitors probably are too. Google has already weeded out a huge number of weaker candidates.

From Google's point of view, it has done a great job: 96% of documents never made it into the index. But some problems that are obvious to humans are hard for a machine to detect.

From what I've seen, Google is good at telling technically "good" pages from "bad" ones, but not very good at separating great content from merely good content.

Google admitted as much in DOJ antitrust exhibits. A 2016 presentation states: "We do not understand documents. We fake it."

Google relies on user interactions on SERPs to judge content quality

Google's assessment of how "good" a document's contents are is based on user interactions with SERPs. "Each searcher benefits from the responses of past users... and contributes responses that benefit future users," Google states later in the presentation.

There has always been a lot of debate about which interaction data Google uses to assess quality. I believe Google judges content quality almost exclusively from interactions on its SERPs, not on websites. That rules out site-measured metrics such as bounce rate.

If you've been listening closely to those in the know, you'll notice that Google has been fairly open about using click data to rank content.

In 2016, at SMX West, Google engineer Paul Haahr gave a presentation titled "How Google Works: A Google Ranking Engineer's Story." Haahr explained that Google "looks for changes in click patterns" on its search results pages, and added that this user data is "harder to understand than you might expect."

The "Ranking for Research" presentation slide, included in the DOJ exhibits, further supports Haahr's statement.

Google's ability to turn user data into something useful depends on understanding the cause-and-effect relationship between the variables that change and the outcomes that follow.

The SERPs are the only place where Google knows which variables are present. Interactions on websites introduce a vast number of variables that Google cannot see.

Even if Google could identify and measure interactions on websites (arguably harder than assessing content quality itself), the number of variable combinations would grow exponentially. Each combination would need to meet a minimum traffic threshold before meaningful conclusions could be drawn, creating a knock-on effect.
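To make the scaling argument concrete, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that every on-site variable is binary, that traffic spreads evenly across all combinations (the best case), and that each combination needs a fixed, hypothetical minimum number of sessions before any conclusion can be drawn.

```python
def sessions_needed(num_binary_variables: int, min_sessions_per_cell: int) -> int:
    """Minimum sessions required to observe every combination of binary
    variables at least `min_sessions_per_cell` times.

    Assumes traffic is spread perfectly evenly across combinations, which is
    the most optimistic case; real traffic is skewed, so reality is worse.
    """
    cells = 2 ** num_binary_variables  # each on/off combination is its own cell
    return cells * min_sessions_per_cell
```

With a hypothetical threshold of 100 sessions per combination, 10 binary variables already require 102,400 sessions, and 20 variables require more than 100 million. Each added variable doubles the traffic needed.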

Regarding the SERPs, Google admits in its docs that "increasing UX complexity makes feedback progressively hard to convert into accurate value judgments."

Brands and the cesspool

Google claims the "source of magic" in its ability to "fake" the understanding of texts is the "dialogue" that occurs between users and SERPs.

Beyond the DOJ exhibits, Google's patents offer hints about how it folds user interaction into rankings.

One that particularly interests me is the "Site quality score," which, to simplify greatly, looks at relationships such as:

- When searchers include brand or navigational terms in their query, or when websites include them in link anchors. For example, a link anchor or search query for "seo news searchengineland" rather than "seo news."

- When users appear to be selecting a specific result within the SERP.

These signals may indicate that a site is an exceptionally relevant response to the query. This way of judging quality is consistent with former Google CEO Eric Schmidt's statement that "brands are the solution."

This makes sense given studies showing that users have a strong brand bias.

For example, in a Red C survey, 82% of participants chose a brand they were already familiar with when asked to complete a research task such as shopping for a party dress or booking a cruise vacation, regardless of where it ranked on the SERP.

Brands, and the recall they generate, are expensive to build. It makes sense that Google would rely on them when ranking search results.
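As a heavily simplified illustration of the kind of brand-referral signal the "Site quality score" patent describes, the sketch below scores a site by the share of its unique queries that refer to it by brand. The function name, the single-token brand matching, and the ratio itself are my assumptions for illustration, not the patent's actual formula. The same function could be fed link anchor texts instead of queries.

```python
from typing import Iterable


def site_quality_score(queries: Iterable[str], brand_terms: set[str]) -> float:
    """Hypothetical toy score: unique brand-referring queries divided by all
    unique queries that led to the site.

    Brand terms are matched as whole tokens, an assumption made to keep the
    example simple.
    """
    unique_queries = {q.strip().lower() for q in queries}
    if not unique_queries:
        return 0.0
    referring = {
        q for q in unique_queries
        if any(term in q.split() for term in brand_terms)
    }
    return len(referring) / len(unique_queries)
```

For example, the queries "seo news searchengineland", "seo news", "google update searchengineland", and a repeat of "seo news", with the brand term "searchengineland", give three unique queries, two of which name the brand, for a score of about 0.67.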

What does Google consider AI spam?

This year, Google published guidance on AI-generated content. The guidance refers to its spam policies, which define spam as content "intended to manipulate search results."

[Image: Google Spam Policy]

Google's spam policies list "text generated through automated processes without regard for quality or user experience" as an example of spam. In my view, this covers any content produced by AI systems without a human quality assurance (QA) process.

Arguably, there are cases where a generative AI system is trained on proprietary or private data, and its output is configured to be more deterministic to reduce errors and hallucinations. You could argue that this is QA done up front. It is probably a rarely used approach.

I'll refer to everything else as "spam."

Previously, producing this kind of spam required technical skills: scraping data, building databases for mad-libbing, or using PHP to generate text with Markov chains.
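For readers who haven't seen it, the pre-LLM Markov-chain technique works roughly like this: record which word follows each word in a source text, then take a random walk through that table. This is a minimal first-order sketch in Python rather than PHP, and the tiny corpus is made up.

```python
import random
from collections import defaultdict


def build_chain(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words observed immediately after it."""
    words = text.split()
    chain: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)


def generate(chain: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Random walk through the chain; stops early at a word with no successors."""
    rng = random.Random(seed)  # seeded so output is reproducible
    word = start
    output = [word]
    for _ in range(length - 1):
        successors = chain.get(word)
        if not successors:
            break
        word = rng.choice(successors)
        output.append(word)
    return " ".join(output)


if __name__ == "__main__":
    corpus = "cheap flights to paris and cheap hotels in paris and cheap flights to rome"
    print(generate(build_chain(corpus), "cheap", 8))
```

Every adjacent word pair in the output occurs somewhere in the source, which is why such text reads as locally plausible but globally incoherent.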

Now anyone can do it with a few simple prompts, an easy-to-use API, and OpenAI's loosely enforced Publication Policy, which states:

"The role of AI in formulating the content is clearly disclosed in a way that no reader could possibly miss, and that a typical reader would find sufficiently easy to understand."

[Image: OpenAI's Publication Policy]

The volume of AI-generated content being published online is enormous. A Google search for "regenerate response" -chatgpt -results returns tens of thousands of pages containing AI content generated "manually" (that is, without an API).

In many cases, QA has been so poor that "authors" copied and pasted the "Regenerate response" button text from older versions of the ChatGPT interface along with the output.
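A crude way to spot this kind of copy-paste residue is a plain string search for leftover interface text. The phrase list below is an assumption chosen for illustration, and a match only shows careless copying, not AI authorship in general.

```python
import re

# Hypothetical list of chat-interface strings that sometimes survive copy-paste.
TELLTALE_PHRASES = [
    "regenerate response",
    "as an ai language model",
]


def find_ui_residue(page_text: str) -> list[str]:
    """Return the telltale phrases found in the page text.

    Whitespace (including line breaks) is collapsed and case is ignored, since
    pasted UI labels often end up split across lines.
    """
    normalized = re.sub(r"\s+", " ", page_text).lower()
    return [phrase for phrase in TELLTALE_PHRASES if phrase in normalized]
```

For example, a page ending in "Regenerate" followed by a line break and "response" is still flagged, because whitespace is normalized before matching.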

Patterns of AI content spam

When GPT-3 launched, I set up my first test site to see how Google would respond to unedited AI-generated content.

Here's what I did:

- Bought a brand-new domain and set up a bare-bones WordPress install.

- Scraped the top 10,000 best-selling games on Steam.

- Fed these games into the AlsoAsked API to get the questions people ask about them.

- Used GPT-3 to generate answers to these questions.

- Created FAQPage schema for each question and answer.

- Scraped the URL of a YouTube video about each game to embed on its page.

- Used the WordPress API to create a page for each game.
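The FAQPage step is the most mechanical part of that pipeline. Below is a minimal sketch that turns question/answer pairs into schema.org FAQPage JSON-LD, suitable for embedding in a `<script type="application/ld+json">` tag. The function name is hypothetical, and the scraping, AlsoAsked, GPT-3, and YouTube steps are deliberately left out.

```python
import json


def faq_page_jsonld(qa_pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2)
```

The resulting string, together with the answers and embedded video, would form the body of each page, which could then be created through the WordPress REST API (for example, a POST to `/wp-json/wp/v2/pages`).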

There were no ads or other monetization on the site.

Within a few hours, I had a brand-new 10,000-page site with Q&A content about popular video games.

Over three months, both Bing and Google ingested the content and indexed most of the pages. At its peak, Google and Bing each sent the site more than 100 clicks a day.

Test results:

After about four months, Google stopped ranking some of the content, causing a 25% drop in traffic.

A month later, Google stopped sending traffic altogether.

Bing kept sending traffic throughout.

The most interesting part? Google doesn't appear to have taken manual action. There was no message in Google Search Console, and the two-step drop in traffic made me doubt that any human had been involved.

I've seen this pattern again and again on sites using pure AI content:

- Google indexes the site.

- Traffic is delivered quickly with steady gains week on week.

- Traffic then peaks, which is followed by a rapid decline.
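That rise, peak, and decline shape can be checked mechanically against a weekly clicks series. The sketch below is a hypothetical heuristic: the function name, the 50% decline threshold, and the sample numbers are arbitrary assumptions, not anything Google or a visibility tool is known to use.

```python
def rise_peak_decline(weekly_clicks: list[int], min_drop: float = 0.5) -> bool:
    """True if traffic climbs to a peak that is neither the first nor the last
    week, and the final week sits at least `min_drop` (a fraction) below it."""
    if len(weekly_clicks) < 3:
        return False
    # max() returns the first index of the highest value.
    peak_index = max(range(len(weekly_clicks)), key=weekly_clicks.__getitem__)
    if peak_index in (0, len(weekly_clicks) - 1):
        return False  # no rise before the peak, or no decline after it
    peak = weekly_clicks[peak_index]
    final = weekly_clicks[-1]
    return peak > 0 and (peak - final) / peak >= min_drop
```

For example, a series like 10, 40, 90, 140, 105, 5 matches, while a site that is still growing or one that only ever declined does not.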

Another example is Causal.app. In this "SEO heist," more than 1,800 articles were generated with AI from a scraped competitor sitemap. Traffic showed the same pattern: it climbed for several months, stalled, dropped by about 25%, and then crashed almost to zero.

[Image: SISTRIX visibility data for Causal.app]

Because of the press coverage it received, there is considerable debate in the SEO industry about whether this drop was a manual action. I believe it was the algorithm at work.

A similar, and perhaps more interesting, case study involved LinkedIn's "collaborative" AI articles. LinkedIn invited users to "collaborate" by adding to, editing, and fact-checking these AI-generated articles. "Top contributors" were rewarded with a LinkedIn badge.

As in the previous cases, traffic rose and then fell. However, LinkedIn retained some traffic.

[Image: SISTRIX visibility for LinkedIn /advice/ pages]

This data suggests that the traffic swings were driven by algorithms rather than manual action.

Once edited by humans, some LinkedIn collaborative articles apparently met Google's definition of helpful content. Others, in Google's estimation, did not.

Perhaps Google is correct in this particular case.

If it’s spam, why does it rank at all?

From what I've seen, Google ranks content in several stages. Time, cost, and limits on data access prevent it from running its most complex systems on every document.

While documents are assessed continuously, I believe it takes time for Google's algorithms to identify low-quality content. That would explain the recurring pattern: content passes an initial "sniff test" and is only identified as low quality later.

Let's look at some evidence for this claim. Earlier in this piece, we briefly covered Google's "Site quality score" patent and how it uses user interaction data to generate this ranking score.

A brand-new site has no user interaction data from the SERPs yet, so Google can't assess its content quality this way.

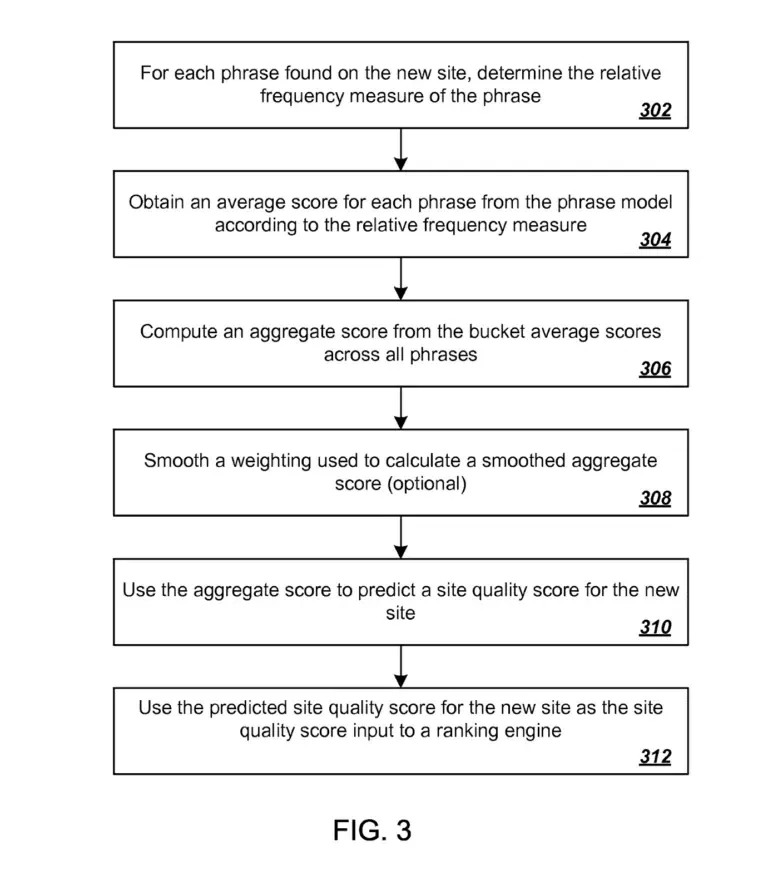

However, another patent, "Predicting site quality," covers this case.

Again, to simplify greatly: a quality score for a new site is predicted by first computing a relative frequency measure for each phrase found on the site.

These measures are then mapped using a phrase model built from the quality scores of previously scored sites.

[Image: Predicting Site Quality patent]
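Here is a toy reconstruction of that idea. It assumes single words stand in for "phrases," that a phrase's relative frequency is its count divided by the site's total word count, and that the phrase model stores the frequency-weighted average quality score of the previously scored sites containing each phrase. All of these choices, the function names, and the default score are my assumptions, not the patent's specification.

```python
from collections import Counter, defaultdict


def relative_frequencies(text: str) -> dict[str, float]:
    """Relative frequency of each word (treated here as a 'phrase') on a site."""
    counts = Counter(text.lower().split())
    total = sum(counts.values())
    return {phrase: n / total for phrase, n in counts.items()}


def build_phrase_model(scored_sites: list[tuple[str, float]]) -> dict[str, float]:
    """Map each phrase to the frequency-weighted mean quality score of the
    previously scored sites that contain it."""
    weighted_sum: dict[str, float] = defaultdict(float)
    weight_total: dict[str, float] = defaultdict(float)
    for text, quality in scored_sites:
        for phrase, freq in relative_frequencies(text).items():
            weighted_sum[phrase] += freq * quality
            weight_total[phrase] += freq
    return {p: weighted_sum[p] / weight_total[p] for p in weighted_sum}


def predict_quality(text: str, model: dict[str, float], default: float = 0.5) -> float:
    """Predict a new site's score as the frequency-weighted mean of the model
    scores of its known phrases; fall back to `default` if none are known."""
    freqs = relative_frequencies(text)
    known = {p: f for p, f in freqs.items() if p in model}
    if not known:
        return default  # no overlap with previously scored sites
    return sum(f * model[p] for p, f in known.items()) / sum(known.values())
```

For example, after training on one site scored 0.9 and one scored 0.2, a new site that shares half its vocabulary with each is predicted at about 0.55. That is the "first guess" the following paragraphs refer to.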

If Google still uses this (and I believe it does, at least in part), many new sites would be ranked on a predicted quality score as a "first guess." The ranking would then be refined using user interaction data.
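One way to picture a "first guess" that is later refined by engagement is a Bayesian-style blend: treat the predicted score as a prior worth a fixed number of pseudo-observations, then let observed interactions pull it toward reality as they accumulate. The Beta-prior formulation, the function name, the prior strength, and the idea of counting "satisfied" interactions are illustrative assumptions, not anything Google has confirmed.

```python
def blended_score(predicted: float, prior_strength: float, successes: int, trials: int) -> float:
    """Posterior mean of a Beta prior centred on `predicted`.

    `prior_strength` is how many pseudo-observations the prediction is worth.
    `successes` counts hypothetical "satisfied" interactions (e.g. clicks not
    followed by a quick return to the SERP) out of `trials` observed ones.
    """
    alpha = predicted * prior_strength + successes
    beta = (1 - predicted) * prior_strength + (trials - successes)
    return alpha / (alpha + beta)
```

With a prediction of 0.8 worth 50 pseudo-observations, the score stays at 0.8 before any data arrives. After 1,000 interactions of which only 300 are "satisfied," it falls to about 0.32. The prior dominates early, and the data dominates once enough of it exists.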

Like many of my colleagues, I've noticed that Google sometimes boosts a site's rankings for what looks like a "test period."

Our theory at the time was that Google was measuring whether user interaction matched its prediction. If it didn't, traffic fell as quickly as it had risen. If it did, the site kept its strong position in the SERP.

Many Google patents refer to "implicit user feedback," including this very candid statement:

"A ranking sub-system can include a rank modifier engine that uses implicit user feedback to cause re-ranking of search results in order to improve the final ranking presented to a user."

Back in 2015, AJ Kohn wrote a detailed piece regarding this type of data.
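To illustrate the rank modifier idea in that patent quote, here is a minimal re-ranking sketch. It assumes each result has a base relevance score from an earlier stage and uses a smoothed click-through rate as the implicit feedback signal. The boost formula, the default weight, the prior CTR, and the URLs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Result:
    url: str
    base_score: float  # score from the initial ranking stage
    impressions: int
    clicks: int


def rerank(results: list[Result], weight: float = 0.5, prior_ctr: float = 0.1, prior_n: int = 20) -> list[Result]:
    """Hypothetical rank modifier: scale each base score by a boost derived
    from its smoothed CTR relative to `prior_ctr`, then re-sort.

    Smoothing with `prior_n` pseudo-impressions keeps results with little data
    close to a neutral boost of 1. With weight <= 1 the boost stays >= 1 - weight.
    """
    def modified(r: Result) -> float:
        smoothed_ctr = (r.clicks + prior_ctr * prior_n) / (r.impressions + prior_n)
        boost = 1 + weight * (smoothed_ctr / prior_ctr - 1)
        return r.base_score * boost

    return sorted(results, key=modified, reverse=True)
```

For example, a result with a slightly lower base score (0.9 vs 1.0) but a 30% CTR overtakes one with a 2% CTR, while results with no impressions keep their original order.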

It's worth remembering that this is just one of many older patents. Google has developed many newer systems since it was published, including:

- RankBrain, which Google has specifically cited as handling "new" queries.

- SpamBrain, Google's primary tool for fighting webspam.

Google: Mind the gap

Outside of Google engineers with first-hand knowledge, I don't think anyone knows how much user/SERP interaction data is applied to individual sites rather than to the SERP as a whole.

Still, we know that modern systems such as RankBrain are trained, at least in part, on user click data.

One other point in AJ Kohn's analysis of the DOJ testimony on these newer systems caught my attention. He writes:

Moving a batch of documents from the "green ring" to the "blue ring" is mentioned several times. All of these refer to a document that I haven't been able to find yet. Nonetheless, the testimony appears to illustrate how Google narrows down results from a big set to a more manageable one so that further ranking factors may be applied.

This supports my sniff-test theory. If a site passes, it is moved to a different "ring" for more computationally or time-intensive analysis to improve accuracy.
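The "rings" idea resembles a standard two-stage retrieval cascade: a cheap scorer runs over everything, and only the top slice reaches a costlier scorer. The function name and the two scoring functions in the usage example are stand-ins invented for illustration. They say nothing about what Google's actual stages compute.

```python
from typing import Callable, Sequence


def cascade(
    docs: Sequence[str],
    cheap_score: Callable[[str], float],
    expensive_score: Callable[[str], float],
    keep: int,
) -> list[str]:
    """Stage 1: rank every doc with the cheap scorer and keep the top `keep`.
    Stage 2: re-rank only that shortlist with the expensive scorer."""
    shortlist = sorted(docs, key=cheap_score, reverse=True)[:keep]
    return sorted(shortlist, key=expensive_score, reverse=True)
```

The design point is cost: the expensive scorer is called only `keep` times, no matter how many documents enter the first stage. That is also why content that clears the cheap stage can rank for a while before the slower analysis catches up with it.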

I think the current state of affairs is as follows:

- Google's current ranking systems can't keep up with the pace at which AI-generated content is created and published.

- Because gen-AI systems produce grammatically correct and mostly "sensible" content, that content passes Google's "sniff tests" and ranks until further analysis is complete.

This is where the issue lies: there is a never-ending backlog of websites waiting for Google to conduct its preliminary assessment because of the speed at which generative AI content is being produced.
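The backlog argument is simple queueing arithmetic: whenever new pages arrive faster than they can be fully evaluated, the queue grows linearly with time. The function name and the rates in the example are invented for illustration; nobody outside Google knows the real figures.

```python
def backlog_after(days: int, arrivals_per_day: int, evaluations_per_day: int, start: int = 0) -> int:
    """Pages still waiting for full evaluation after `days` days, assuming
    constant daily arrival and evaluation rates (a deterministic queue)."""
    backlog = start
    for _ in range(days):
        # The queue can't go negative: idle capacity is simply wasted.
        backlog = max(0, backlog + arrivals_per_day - evaluations_per_day)
    return backlog
```

With a hypothetical 1,000,000 new pages a day against capacity for 800,000 evaluations, the queue reaches 6,000,000 pages after 30 days. If capacity exceeds arrivals, it stays empty.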

An HCU hop to UGC to beat the GPT?

I believe Google knows this is a major challenge. If I may hazard a wild guess, recent Google updates, such as the helpful content update (HCU), may have been applied to compensate for this weakness.

It's no secret that user-generated content (UGC) sites such as Reddit benefited from the HCU and the "hidden gems" systems.

Reddit was already one of the most visited sites on the internet. Recent Google changes have more than doubled its search visibility, at the expense of other websites.

My conspiracy theory is that UGC sites, with a few notable exceptions, are among the least likely places to find mass-produced AI content, because much of what is posted there is moderated.

These search results may not be "perfect," but overall satisfaction with sifting through some raw user content may be higher than with Google consistently ranking whatever ChatGPT last vomited onto the web.

Because Google can't respond to AI spam quickly enough, prioritizing UGC may be a stopgap for improving quality.

What does Google’s long-term plan look like for AI spam?

Eric Lehman, a former 17-year Google employee who worked as a software engineer on search quality and ranking, gave extensive testimony about the company during the DOJ trial.

One recurring theme was Lehman's claim that Google's machine learning systems, BERT and MUM, are becoming more important than user data. They are so powerful that Google may rely on them more than on user data in the future.

Slices of user interaction data give search engines an excellent proxy for making decisions. The limitation is collecting enough data fast enough to keep up with changes, which is why some systems use other methods.

Suppose Google can use breakthroughs such as BERT to build models that greatly improve the accuracy of its initial content assessment. If so, it may be able to close the gap and shorten the time it takes to identify and demote spam.

For now, this problem exists and is exploitable. The more people look for low-effort, high-reward opportunities, the more pressure Google faces to fix its weaknesses.

Ironically, once a system becomes effective at fighting a particular type of large-scale spam, it may become almost redundant, because the opportunities and incentives to produce that spam shrink.

Thanks for reading my article 🙏.